Securing AI Ecosystems: Cybersecurity Measures for AI Implementation

Introduction

As organizations rapidly adopt artificial intelligence (AI), cybersecurity risks grow in complexity and scale. AI systems—powered by vast datasets and interconnected algorithms—are prime targets for cyberattacks, data breaches, and adversarial exploitation. A single vulnerability can lead to financial losses, regulatory penalties, and reputational damage.

For C-level leaders, securing AI ecosystems is no longer optional—it’s a strategic imperative. This article explores critical cybersecurity threats in AI deployment and provides actionable measures to safeguard AI systems.

Key Cybersecurity Threats in AI Implementation

1. Data Poisoning & Model Manipulation

Attackers can corrupt training data or manipulate AI models to produce incorrect outcomes. For example, injecting biased data into a fraud detection system could lead to false approvals or rejections.

Mitigation Strategies:

– Implement data integrity checks and anomaly detection in training datasets.

– Use adversarial training to harden AI models against manipulation.

– Apply model versioning and digital signatures to prevent unauthorized changes.

2. Adversarial Attacks on AI Models

Hackers can exploit AI vulnerabilities by feeding deceptive inputs (e.g., subtly altered images or text) to trick models into making wrong decisions.

Mitigation Strategies:

– Deploy robust AI models resistant to adversarial perturbations.

– Use input validation and sanitization to detect malicious inputs.

– Conduct penetration testing specifically targeting AI decision boundaries.

3. Model Theft & Intellectual Property (IP) Breaches

AI models are valuable assets. Attackers may steal proprietary models via reverse engineering, API exploitation, or insider threats.

Mitigation Strategies:

– Apply model obfuscation and encryption to protect AI algorithms.

– Restrict API access with rate limits and authentication controls.

– Monitor model usage patterns for unusual activity.

4. Privacy Risks & Data Leakage

AI systems often process sensitive data (e.g., customer PII, financial records). Unauthorized access or leaks can violate GDPR, CCPA, and other privacy laws.

Mitigation Strategies:

– Implement differential privacy techniques to anonymize training data.

– Use federated learning to keep data decentralized and minimize exposure.

– Enforce strict access controls and data encryption at rest and in transit.

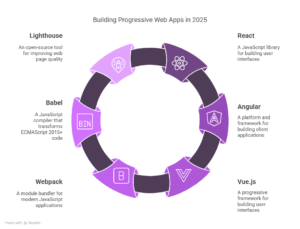

5. Supply Chain & Third-Party Risks

Many AI systems rely on external libraries, cloud services, and vendor APIs, which can introduce vulnerabilities.

Mitigation Strategies:

– Conduct vendor security assessments before integration.

– Maintain an AI software bill of materials (SBOM) to track dependencies.

– Isolate high-risk third-party components in sandboxed environments.

Best Practices for Securing AI Ecosystems

1. Adopt a Zero-Trust AI Security Model

– Verify every access request to AI systems, even from internal networks.

– Implement multi-factor authentication (MFA) and least-privilege access

2. Continuous Monitoring & Threat Detection

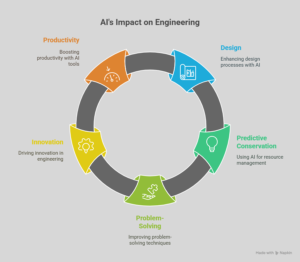

– Deploy AI-powered cybersecurity tools to detect anomalies in real time.

– Establish AI incident response plans for rapid containment.

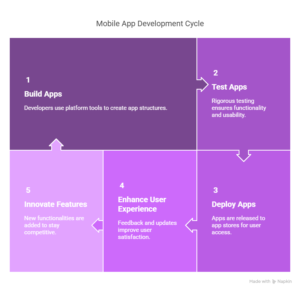

3. Secure the AI Development Lifecycle

– Integrate security into AI development (DevSecOps for AI)

– Conduct red teaming exercises to simulate AI-specific attacks.

4. Compliance & Governance

– Align AI security practices with NIST AI Risk Management Framework, ISO 27001, and SOC 2.

– Ensure auditability and explainability for regulatory compliance.

5. Employee Training & Awareness

– Educate teams on AI-specific threats (e.g., prompt injection in LLMs)

– Foster a security-first culture in AI development and deployment.

Conclusion

AI cybersecurity is a dynamic challenge requiring proactive strategies. By implementing robust data protections, adversarial defenses, and strict access controls organizations can mitigate risks while harnessing AI’s transformative potential.

C-level leaders must prioritize AI security investments, collaborate with cybersecurity experts, and stay ahead of evolving threats. A well-secured AI ecosystem not only prevents breaches but also builds trust with customers, regulators, and stakeholders.

Call to Action

– Audit your AI systems for vulnerabilities today.

– Integrate AI security into enterprise risk management

– Partner with cybersecurity firms specializing in AI defense.

By taking these steps, businesses can deploy AI confidently, knowing their ecosystems are resilient against cyber threats.