In an AI-driven world, the demand for robust models does not always sit comfortably with data privacy. Most machine learning pipelines rely on centralized data: they collect large amounts of user data on a central server and train the model there. But what if that centralization compromises data security and privacy?

Enter Federated Learning (FL), a technique that lets AI models learn from your data without that data ever leaving your device.

What is Federated Learning?

Federated Learning is a decentralized machine learning approach that trains a shared model across many devices or servers, each holding its own local data samples, without ever exchanging the raw data. Your device (your phone, for example) trains the model on your local data, and only the model updates (e.g., gradients or weights) are sent to a central server, never the data itself.

Google brought the approach to prominence in 2017, using it to improve predictive keyboards such as Gboard without storing users’ keystrokes on its servers.

Privacy by Design: An Overview

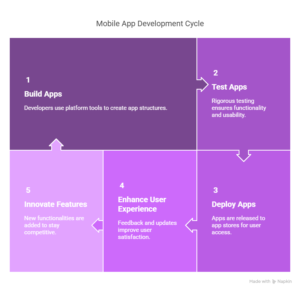

Here is a simplified walkthrough of the FL process:

A central server sends an initial model to a set of user devices.

Each device trains the model on its local data (e.g., texts, images, or behavior patterns).

Instead of sending raw data, each device sends only the model’s updated parameters to the server.

The server aggregates these updates to improve the global model.

The cycle repeats, refining the model round after round while the raw data stays on the devices.
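The five steps above can be simulated in a few dozen lines. The sketch below is a simplified, illustrative version of Federated Averaging (FedAvg), built on assumptions of our own rather than on any production system: a tiny linear model y ≈ w·x + b, three simulated "devices" holding synthetic data, full-batch gradient descent for local training (instead of minibatch SGD), and a server that averages parameters weighted by each device's sample count. The function names (`local_train`, `aggregate`, `federated_training`, `make_client`) are hypothetical.

```python
import random


def local_train(weights, data, lr=0.1, epochs=5):
    """Steps 2-3: train on one device's private data and return only parameters.

    Hypothetical model: y ≈ w * x + b, fitted with full-batch gradient descent
    on mean squared error. `data` never leaves this function.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def aggregate(updates):
    """Step 4: average client parameters, weighted by local sample count."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return w, b


def federated_training(clients, rounds=50):
    global_weights = (0.0, 0.0)  # Step 1: the server's initial model
    for _ in range(rounds):  # Step 5: repeat for a fixed number of rounds
        # Each device starts from the current global model; only
        # (parameters, sample_count) pairs are sent back to the server.
        updates = [(local_train(global_weights, data), len(data)) for data in clients]
        global_weights = aggregate(updates)
    return global_weights


def make_client(num_samples, rng):
    """Synthetic private dataset drawn from y = 3x + 1 (an assumption for the demo)."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(num_samples)]
    return [(x, 3 * x + 1) for x in xs]


rng = random.Random(0)
clients = [make_client(n, rng) for n in (20, 30, 40)]
w, b = federated_training(clients)
print(f"w = {w:.2f}, b = {b:.2f}")  # should land close to w = 3, b = 1
```

Because every simulated device here draws from the same noise-free relationship, the averaged model converges to the true parameters; with real, non-uniform user data the local models pull in different directions and convergence is slower.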

Techniques such as Secure Aggregation and Differential Privacy further reduce the risk that the exchanged updates leak sensitive information about individual users.
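To make these two ideas concrete, here is a minimal sketch of each, under simplifying assumptions. The first part shows pairwise additive masking, the core trick behind Secure Aggregation: clients add random masks that cancel when summed, so the server learns the total but not individual updates. It assumes updates are already quantized to integers, uses one shared random generator in place of the pairwise key agreement a real protocol needs, and ignores client dropouts. The second part shows norm clipping plus Gaussian noise, a common building block of differentially private training; calibrating `noise_std` to a specific (ε, δ) privacy budget is out of scope. All names are hypothetical.

```python
import math
import random

MOD = 2**32  # masked arithmetic on integers modulo 2^32 (sketch assumption)


def pairwise_masks(client_ids, rng):
    """Give each client a mask such that all masks sum to 0 (mod MOD).

    For every pair (a, b), one random value is added to a's mask and
    subtracted from b's. Real Secure Aggregation derives these values from
    pairwise key agreement and handles dropouts; a shared RNG stands in here.
    """
    masks = {cid: 0 for cid in client_ids}
    for i, a in enumerate(client_ids):
        for b in client_ids[i + 1:]:
            m = rng.randrange(MOD)
            masks[a] = (masks[a] + m) % MOD
            masks[b] = (masks[b] - m) % MOD
    return masks


def clip_and_noise(update, clip_norm, noise_std, rng):
    """Bound the update's L2 norm to clip_norm, then add Gaussian noise."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale + rng.gauss(0.0, noise_std) for u in update]


rng = random.Random(42)
# Hypothetical quantized updates, one integer per client for brevity.
updates = {"alice": 17, "bob": 5, "carol": 20}
masks = pairwise_masks(list(updates), rng)
masked = {cid: (u + masks[cid]) % MOD for cid, u in updates.items()}

# The server sees only `masked`; each value on its own looks random,
# yet the masks cancel and the sum equals the sum of the true updates.
aggregate = sum(masked.values()) % MOD
print(aggregate)  # 42

private_update = clip_and_noise([3.0, 4.0], clip_norm=1.0, noise_std=0.1, rng=rng)
print(private_update)
```

Clipping limits how much any single user can influence the model, which is what makes the added noise meaningful; masking hides individual updates from the server but, on its own, does nothing to stop the aggregate from revealing information.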

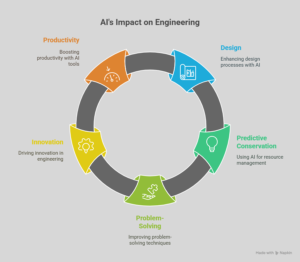

Why Federated Learning Matters

Improved Data Privacy

The biggest win is privacy. Raw data never leaves the device, which reduces the risk of breaches and can ease regulatory compliance (e.g., under GDPR or HIPAA).

Reduced Latency

Because training happens locally, FL can enable near-real-time learning and personalized experiences without relying on the cloud.

Scalable AI

With billions of internet-connected devices, federated learning taps into a massive, decentralized data reservoir, allowing for richer, more varied training data.

Is There a Trade-Off With Accuracy?

Intuitively, one might expect training on non-uniform, decentralized data to hurt model performance. However, advances in federated optimization algorithms such as Federated Averaging (FedAvg) have shown that models trained with FL can come close to, and in some settings even outperform, centrally trained models, particularly when personalization is applied.
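For reference, the aggregation step at the heart of FedAvg is a weighted average of the clients' locally trained models. Here $K$ is the number of clients participating in round $t$, $n_k$ is the number of local training examples on client $k$, and $w_{t+1}^{k}$ is the model client $k$ obtains after running a few epochs of local training starting from the current global model $w_t$:

```latex
w_{t+1} \;=\; \sum_{k=1}^{K} \frac{n_k}{n}\, w_{t+1}^{k},
\qquad n \;=\; \sum_{k=1}^{K} n_k
```

Clients with more data pull the global model further toward their local solution. When client data is non-uniform, the local models $w_{t+1}^{k}$ drift apart from one another, which is one reason the number of local epochs per round is typically tuned.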

That said, FL is not a panacea. It shines in cases such as:

- Predictive text and keyboard suggestions

- Healthcare applications (where information is sensitive)

- Smart objects and IoT devices (e.g., wearables, sensors)

- Financial applications (fraud detection on mobile devices)

Challenges and Limitations

Promising as it is, FL has its pitfalls:

Communication Overhead: Sending model updates to and from many devices every round can be bandwidth-intensive.

System Heterogeneity: Devices vary widely in compute power and connectivity, so some train slowly or drop out mid-round, which makes training inconsistent.

Security Risks: Although raw data is not shared, malicious participants can submit poisoned updates, and adversaries may try to infer private information from the updates themselves. Recent research addresses these challenges with methods such as federated transfer learning, homomorphic encryption, and robust aggregation.
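Robust aggregation can be illustrated with one of its simplest forms, the coordinate-wise median, used in place of a plain average. The sketch below is a toy under stated assumptions, not a defense against adaptive attackers: the two-parameter update vectors are made up, and a single hypothetical malicious client submits an extreme update. The helper names are hypothetical.

```python
from statistics import mean, median


def mean_aggregate(updates):
    """Plain (unweighted) coordinate-wise mean of client updates."""
    return [mean(coords) for coords in zip(*updates)]


def median_aggregate(updates):
    """Coordinate-wise median: each parameter takes the middle client value,
    so a minority of outliers cannot drag it arbitrarily far."""
    return [median(coords) for coords in zip(*updates)]


honest = [[0.90, -0.20], [1.10, -0.10], [1.00, -0.30], [0.95, -0.20]]
poisoned = honest + [[100.0, 50.0]]  # one hypothetical malicious client

print("mean:  ", mean_aggregate(poisoned))    # pulled far away by the outlier
print("median:", median_aggregate(poisoned))  # [1.0, -0.2]
```

The trade-off is that the median discards information from honest clients too, so on clean, non-uniform data it can converge more slowly than averaging.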

The Future of Federated AI

Federated Learning isn’t just a technical innovation; it’s a paradigm shift in how we think about data, privacy, and intelligence. As regulations grow stricter and customers demand more control over their data, FL offers a realistic path forward, one that balances machine learning with privacy. In a world that increasingly runs on trust, federated learning is emerging as a cornerstone of ethical AI.