CTO Perspective: Evaluating AI Solution Scalability and Flexibility

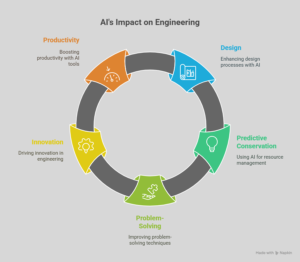

As artificial intelligence (AI) continues to transform industries, Chief Technology Officers (CTOs) face the challenge of selecting AI solutions that not only meet current business needs but also scale and adapt to future demands. Scalability and flexibility are critical factors in ensuring long-term success, avoiding costly re-architecting, and maintaining a competitive edge.

Why Scalability and Flexibility Matter in AI Solutions

AI initiatives often start as small pilot projects but must expand to handle increasing data volumes, user demands, and evolving business requirements. A scalable AI solution can absorb that growth without being re-architected, while a flexible one can adapt to new use cases, regulatory changes, and technological advancements.

Key Considerations for Evaluating AI Scalability

1. Infrastructure & Compute Efficiency

– Can the solution run efficiently in cloud, hybrid, or on-premises deployments?

– Does it support elastic scaling (e.g., Kubernetes autoscaling, serverless) to handle spikes in demand?

– Are there optimizations for GPU/TPU acceleration to reduce costs at scale?
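To make the compute question concrete, here is a rough capacity estimate based on Little's law (requests in flight = arrival rate × latency). It is a back-of-the-envelope sketch, not a sizing tool: the function name, the parameters, and the 30% headroom default are all assumptions chosen for illustration.

```python
import math


def replicas_needed(peak_rps: float, latency_s: float,
                    concurrency_per_replica: int,
                    headroom: float = 0.3) -> int:
    """Estimate how many model-serving replicas a peak load requires.

    All names and defaults here are illustrative assumptions.
    """
    if peak_rps < 0 or latency_s < 0:
        raise ValueError("peak_rps and latency_s must be non-negative")
    if concurrency_per_replica <= 0 or not 0 <= headroom < 1:
        raise ValueError("invalid concurrency or headroom")

    # Little's law: average number of requests being served at once.
    in_flight = peak_rps * latency_s
    # Reserve a fraction of each replica's capacity for bursts.
    usable = concurrency_per_replica * (1.0 - headroom)
    return max(1, math.ceil(in_flight / usable))


# 200 requests/s at 250 ms each, 8 concurrent requests per replica.
print(replicas_needed(peak_rps=200, latency_s=0.25, concurrency_per_replica=8))
```

Running the same estimate for projected load one or two years out gives a quick read on whether a vendor's pricing and architecture still hold at that scale.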

2. Data Handling & Processing

– Can the AI model process real-time and batch data efficiently?

– Does it support incremental learning or continuous training without full retraining?

– Is the data pipeline optimized for high-throughput and low-latency requirements?
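The difference between incremental learning and full retraining is easiest to see in a toy example. The sketch below updates a one-feature linear model one observation at a time with a stochastic gradient step, so earlier data never has to be reloaded. The class name, the learning rate, and the synthetic stream are assumptions for illustration; a production system would more likely rely on a library's incremental-training support.

```python
import random


class OnlineLinearModel:
    """Single-feature linear model updated one observation at a time."""

    def __init__(self, learning_rate: float = 0.1) -> None:
        self.weight = 0.0
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: float) -> float:
        return self.weight * x + self.bias

    def update(self, x: float, y: float) -> None:
        # One gradient step on the squared error of this observation only.
        error = self.predict(x) - y
        self.weight -= self.learning_rate * error * x
        self.bias -= self.learning_rate * error


def stream(n: int, seed: int = 0):
    """Hypothetical data stream: noise-free samples of y = 2x + 1."""
    rng = random.Random(seed)
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        yield x, 2.0 * x + 1.0


model = OnlineLinearModel()
for x, y in stream(2000):
    model.update(x, y)  # no access to earlier observations is needed
```

After the stream is consumed, `model.weight` and `model.bias` sit close to 2 and 1, and new observations can keep arriving without a retraining job over the full history.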

3. Model Performance at Scale

– How does accuracy degrade (if at all) with larger datasets or more users?

– Can the model be fine-tuned or updated without downtime?

– Are there automated monitoring and retraining mechanisms?
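An automated retraining mechanism usually starts with a simple trigger. The following sketch tracks accuracy over a sliding window of recent predictions and flags the model once accuracy falls below a threshold. The class name, window size, threshold, and minimum sample count are illustrative assumptions, and it presumes that ground-truth labels eventually arrive for each prediction.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Rolling-window accuracy tracker with a retraining trigger."""

    def __init__(self, window: int = 500, threshold: float = 0.9,
                 min_samples: int = 50) -> None:
        # deque(maxlen=...) silently drops the oldest outcome when full.
        self._outcomes = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, prediction, actual) -> None:
        self._outcomes.append(prediction == actual)

    @property
    def accuracy(self) -> Optional[float]:
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def needs_retraining(self) -> bool:
        # Avoid triggering on a handful of early samples.
        if len(self._outcomes) < self.min_samples:
            return False
        return self.accuracy < self.threshold


monitor = AccuracyMonitor(window=10, threshold=0.8, min_samples=5)
for _ in range(10):
    monitor.record("fraud", "fraud")   # model is correct
healthy = monitor.needs_retraining()
for _ in range(5):
    monitor.record("fraud", "legit")   # model starts missing
degraded = monitor.needs_retraining()
```

In this run `healthy` is `False` and `degraded` is `True`, since the last ten outcomes are half wrong. When evaluating a vendor, the question is whether an equivalent signal is exposed and can be wired to a retraining pipeline.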

Key Considerations for Evaluating AI Flexibility

1. Adaptability to New Use Cases

– Can the same AI framework be repurposed for different business functions (e.g., customer support, fraud detection, supply chain optimization)?

– Does it support multi-modal AI (text, image, video, sensor data)?

2. Integration & Interoperability

– Can the AI solution integrate with existing enterprise systems (ERP, CRM, databases)?

– Does it support open interfaces and formats (documented APIs, ONNX, TensorFlow/PyTorch compatibility)?

3. Regulatory & Compliance Readiness

– Can the AI model be easily audited or modified to meet new regulations (e.g., GDPR, the EU AI Act)?

– Does it support explainability and bias mitigation features?

Best Practices for CTOs Selecting AI Solutions

1. Start with a Modular Architecture

– Prefer microservices-based AI deployments over monolithic systems.

– Use containerization (Docker) and orchestration (Kubernetes) for easier scaling.

2. Prioritize Open & Extensible Frameworks

– Avoid vendor lock-in by choosing platforms with open-source compatibility.

– Ensure the solution allows custom model development alongside pre-built AI services.
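One practical guard against lock-in is to have application code depend on a small interface rather than on a specific vendor SDK. The sketch below uses Python's `typing.Protocol` for that purpose. Every class and function name here is hypothetical, and the "vendor" wrapper takes a plain callable as a stand-in for whatever client a real hosted service would provide.

```python
from typing import Callable, Dict, List, Protocol, Sequence


class TextClassifier(Protocol):
    """The only contract application code is allowed to rely on."""

    def predict(self, texts: Sequence[str]) -> List[str]: ...


class KeywordClassifier:
    """Stand-in for a custom, in-house model."""

    def __init__(self, keyword: str, label: str, default: str) -> None:
        self.keyword, self.label, self.default = keyword, label, default

    def predict(self, texts: Sequence[str]) -> List[str]:
        return [self.label if self.keyword in t.lower() else self.default
                for t in texts]


class HostedClassifier:
    """Stand-in for a wrapper around a pre-built hosted service."""

    def __init__(self, call: Callable[[str], str]) -> None:
        self._call = call  # hypothetical client function, injected

    def predict(self, texts: Sequence[str]) -> List[str]:
        return [self._call(t) for t in texts]


def route_tickets(model: TextClassifier,
                  tickets: Sequence[str]) -> Dict[str, List[str]]:
    """Group tickets by predicted label; works with any conforming model."""
    routed: Dict[str, List[str]] = {}
    for ticket, label in zip(tickets, model.predict(tickets)):
        routed.setdefault(label, []).append(ticket)
    return routed


tickets = ["Refund not received", "How do I reset my password?"]
in_house = KeywordClassifier("refund", "billing", "general")
hosted = HostedClassifier(lambda text: "billing" if "Refund" in text else "general")
assert route_tickets(in_house, tickets) == route_tickets(hosted, tickets)
```

Because `route_tickets` only knows about `TextClassifier`, swapping the in-house model for a hosted one (or the reverse) does not touch the calling code.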

3. Test Under Real-World Conditions

– Conduct load testing to evaluate performance under peak demand.

– Simulate data drift and model decay scenarios to assess adaptability.
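A drift simulation can be as small as comparing a feature's training distribution against a deliberately shifted copy. The sketch below does this with the Population Stability Index (PSI), a common drift score. The equal-width binning, the bin count, and the synthetic Gaussian data are assumptions for illustration; the frequently quoted cut-offs of roughly 0.1 and 0.25 are rules of thumb rather than standards.

```python
import math
import random


def population_stability_index(baseline, current, bins=10, eps=1e-6):
    """PSI between two numeric samples, using equal-width bins from the baseline."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins
    if width == 0:
        width = 1.0  # degenerate baseline: everything falls into one bin

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected = bin_fractions(baseline)
    observed = bin_fractions(current)
    return sum((o - e) * math.log(o / e) for e, o in zip(expected, observed))


rng = random.Random(42)  # fixed seed so the simulation is repeatable
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]
drifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]  # simulated mean shift

psi_stable = population_stability_index(training, stable)
psi_drifted = population_stability_index(training, drifted)
```

Here `psi_stable` stays near zero while `psi_drifted` is far larger. Feeding the drifted sample through a candidate solution shows whether its monitoring notices the shift and how the model's accuracy responds.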

4. Plan for Continuous Evolution

– Implement MLOps practices for automated model monitoring and retraining.

– Ensure the team has the skills to iterate and improve AI models over time.

Conclusion

For CTOs, selecting an AI solution is not just about solving today’s problems; it is about building a foundation for future growth. Scalability ensures that AI systems can handle increasing workloads without breaking, while flexibility allows them to evolve with business needs and technological advancements. By carefully evaluating these factors, organizations can deploy AI solutions that deliver sustained value and innovation.